
What is Deepfake technology? What are the risks of Deepfake technology?

Deepfake is a technology that uses artificial intelligence to create images, videos, or audio so realistic that they are difficult to distinguish from authentic content.

While it has positive applications in entertainment, media, or research, deepfake also poses risks, particularly when misused to fabricate statements or actions of celebrities and politicians, leading to information chaos.

Read on to dive deeper into this technology and pick up some practical tips for staying vigilant against the risks that deepfake presents.

I. What is Deepfake?

The term Deepfake originates from "Deep Learning" and "Fake," referring to images, videos, or audio that have been edited or generated using artificial intelligence, AI-based tools, or AV editing software.

By leveraging artificial neural networks and advanced machine learning algorithms, deepfake can simulate human actions, voices, and appearances with astonishing realism.

Although deepfake can be used legitimately in entertainment and education, its potential for misuse raises serious ethical, legal, and cybersecurity concerns.

II. How does Deepfake work?

Many people mistakenly believe a deepfake is merely an edited or photoshopped video or image. In practice, the content is synthesized by a trained model rather than retouched by hand.

Creating a deepfake often involves the use of Generative Adversarial Networks (GANs), a type of neural network architecture consisting of two main components: the generator and the discriminator.

- Generator: The generator is responsible for creating synthetic content. It takes an input (typically random noise, or a source image or audio clip in conditional setups) and produces fake content that mimics the characteristics of the data it was trained on.

For example, a generator trained on a dataset of human faces can create highly realistic fake faces.

- Discriminator: The discriminator’s role is to evaluate the content produced by the generator. It attempts to distinguish between real and fake content.

The discriminator provides feedback to the generator, indicating how closely the generated content resembles authentic data.

When creating deepfake images, the GAN system analyzes images of the target from multiple angles to capture all details and perspectives.

When creating deepfake videos, GANs analyze videos from various angles, studying behavior, movements, and speech patterns.
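The generator and discriminator loop described above can be sketched in a few lines. This is a toy, one-dimensional illustration only: the "authentic data" are numbers drawn around 4.0, the generator is a single linear function, and the discriminator is a single logistic unit. All names (`train_toy_gan`, `sigmoid`, the parameters `a`, `b`, `w`, `c`) are hypothetical, and real deepfake systems use deep networks trained on images, video, or audio rather than anything this small.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Logistic function, written to avoid overflow for large |s|."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Alternate discriminator and generator updates on 1-D data (toy sketch)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # one "authentic" sample (assumed data)
        noise = rng.gauss(0.0, 1.0)
        fake = a * noise + b        # one generated sample

        # Discriminator step: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: gradient ascent on log D(fake), i.e. the generator
        # is rewarded when the discriminator scores its output as real.
        d_fake = sigmoid(w * fake + c)
        a += lr * (1.0 - d_fake) * w * noise
        b += lr * (1.0 - d_fake) * w
    return (a, b), (w, c)


(generator_params, discriminator_params) = train_toy_gan()
```

The point of the sketch is the feedback path: the only signal the generator receives is the discriminator's score on its own output, which is the "feedback" the text refers to.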

III. Technologies behind the development of Deepfake

The rise of deepfake is not a coincidence. Behind the nearly indistinguishable fake videos lies a combination of cutting-edge artificial intelligence technologies.

Below are the core technologies driving the rapid development of deepfake:

Generative Adversarial Networks (GANs)

GANs are the “heart” of deepfake. They operate through a continuous contest between two neural networks: one generates fake images/videos, while the other attempts to detect fakes. This competition results in increasingly realistic outputs that are hard to differentiate from authentic content.

Convolutional Neural Networks (CNNs)

CNNs analyze and recognize facial features and movements in image/video data. Acting as the “sharp eyes” of deepfake, this technology enables precise replication of human expressions.
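To make the idea of a CNN "seeing" features more concrete, here is a minimal sketch of the basic operation inside a convolutional layer: sliding a small filter over an image. The function name `conv2d`, the tiny 4x4 image, and the hand-picked edge filter are illustrative assumptions; a trained CNN learns many such filters from data and stacks them in layers.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` without padding and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


# A 4x4 grayscale "image": dark left half, bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
# feature_map == [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The filter responds only in the middle column, where the brightness changes. Facial contours such as the jawline or eyelids are picked up in the same way, by filters that respond to local patterns.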

Autoencoders

Autoencoders capture key features of faces and bodies (e.g., expressions, movements) and “map” them onto a source video. This allows a face to be “swapped” while maintaining natural emotions and motions.

Natural Language Processing (NLP)

NLP, combined with speech synthesis, enables the creation of deepfake voices. Such systems are trained on collected audio data and analyze tone, speaking rate, and intonation to recreate a voice that mirrors the target’s characteristics.
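As a small illustration of what "analyzing tone" can mean in practice, the sketch below estimates the pitch of an audio frame by autocorrelation, a classic signal-processing technique. It is not the method of any particular voice-cloning system. The function name `estimate_pitch_hz`, the search range of 50 to 400 Hz, and the synthetic 200 Hz tone standing in for recorded speech are all assumptions made for the example.

```python
import math


def estimate_pitch_hz(samples, sample_rate, min_hz=50, max_hz=400):
    """Estimate the fundamental frequency by picking the autocorrelation peak."""
    min_lag = sample_rate // max_hz  # shortest period considered, in samples
    max_lag = sample_rate // min_hz  # longest period considered, in samples
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # How similar is the signal to a copy of itself shifted by `lag`?
        score = sum(samples[n] * samples[n + lag]
                    for n in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


sample_rate = 8000
# Synthetic 0.1 s tone at 200 Hz, standing in for a voiced speech frame.
tone = [math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(800)]
pitch = estimate_pitch_hz(tone, sample_rate)
# pitch == 200.0
```

Tracking this value frame by frame gives an intonation contour, one of the characteristics a voice model has to reproduce to sound like a specific person.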

High Performance Computing (HPC)

Deepfake requires processing millions of data points for images, audio, and movements, made possible by powerful GPUs and cloud computing platforms.

AI-Integrated Video Editing Software

To enhance smoothness and realism, modern video editing software incorporates AI to refine colors, lighting, facial contours, and even sync lip movements with dialogue.

IV. Benefits and dangers of Deepfake

4.1 Benefits of Deepfake

Deepfake, in itself, is not inherently bad; rather, it is a fascinating technology. It supports applications in entertainment, education, and more.

In entertainment: Deepfake opens new opportunities in the film, music, and gaming industries. It creates humorous videos, parodies, and short films featuring famous figures, engaging and entertaining audiences.

- Bringing historical figures into interactive videos for unprecedented experiences.

- Personalizing content for users in advertisements or gaming experiences.

- Recreating deceased actors in films to complete narratives without disrupting emotional continuity.

In Education: Deepfake can assist students by:

- Enabling “direct” interactions with historical figures or experts through vivid voice and image simulations.

- Offering personalized lessons with virtual teachers speaking local languages, tailored to specific student groups.

In Media & Marketing: Deepfake makes it possible to create content quickly and flexibly without live filming or recording, and to personalize ads with customized visuals, dialogue, and voices for target audiences.

In Cultural & Historical Preservation: Deepfake can reconstruct images of historical figures or events to help younger generations understand the past, and can revive ancient languages and voices to preserve intangible cultural heritage.

4.2 Risks of Deepfake

Despite their positive potential, deepfakes are increasingly being used as a tool for illicit activities.

The difficulty in identifying deepfake images and videos makes online users vulnerable to misinformation spread through this technology.

Risks of Deepfake:

- Financial fraud and identity theft

Criminals use deepfakes to impersonate the voices or images of acquaintances, such as friends, family, or company executives, to request money transfers. Victims, unaware of the deception, may lose hundreds of millions or even billions of Vietnamese dong. According to the FBI, reported online fraud losses in the U.S. reached $10.3 billion in 2022, up from $6.9 billion in 2021.

- Extortion with fabricated sensitive content

Alongside altering footage and creating fake news stories, deepfakes can be used for defamation, slander, or manipulating public opinion on specific issues. Malicious actors use deepfake images or videos for extortion, threatening to release fabricated content that damages victims’ reputations. Beyond personal harm, deepfakes can spread pornographic content, leading to unpredictable and hard-to-control consequences.

In 2024, deepfake videos featuring pop star Taylor Swift surfaced, depicting her in fabricated, misleading scenarios, highlighting the vulnerability of celebrities to reputational and privacy damage.

- Corporate security and reputational risks

Fake videos of executives issuing fraudulent orders or controversial statements can cause stock price crashes, significant reputational damage, and severe cyberattacks on corporate security systems.

- Political manipulation

In January 2024, a robocall using an AI-generated imitation of President Biden's voice urged New Hampshire voters not to vote in the state's primary. This incident exemplifies deepfake’s potential to mislead the public and cause confusion during election years, raising concerns about the integrity of political discourse.

- Deception of surveillance and authentication systems: Deepfakes can bypass facial or voice recognition systems for KYC verification, rendering automated security mechanisms vulnerable to exploitation.

- Erosion of social trust: Deepfake blurs the line between real and fake, causing confusion and skepticism about all content. This leads to the “liar’s dividend,” where even authentic videos are dismissed as fake.

V. Detecting and mitigating Deepfake risks

As deepfake evolves from a technological novelty to a genuine threat, organizations must proactively implement intelligent, multi-layered defense solutions.

- AI & machine learning

Modern detection tools use AI and machine learning to scrutinize every frame and audio segment, identifying discrepancies invisible to the human eye.

- Blockchain Integration

Emerging solutions use blockchain to verify content origins and ensure data integrity, enhancing trust in authentication processes.
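The core idea behind blockchain-based provenance can be sketched as a hash chain: each record stores a fingerprint of the registered content plus the hash of the previous record, so altering any earlier entry breaks every later link. The sketch below is a simplified, single-machine illustration under that assumption; the names `append_record`, `verify_ledger`, and `is_registered` are hypothetical, and a real deployment would add distributed consensus, timestamps, and publisher identities.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _record_hash(content_sha256: str, prev_hash: str) -> str:
    """Hash the record body in a canonical (sorted-key) JSON form."""
    body = {"content_sha256": content_sha256, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(ledger: list, content: bytes) -> dict:
    """Register content by appending a record linked to the previous one."""
    prev_hash = ledger[-1]["record_hash"] if ledger else GENESIS_HASH
    content_sha256 = hashlib.sha256(content).hexdigest()
    record = {
        "content_sha256": content_sha256,
        "prev_hash": prev_hash,
        "record_hash": _record_hash(content_sha256, prev_hash),
    }
    ledger.append(record)
    return record


def verify_ledger(ledger: list) -> bool:
    """Return True only if every record hash and every link is intact."""
    prev_hash = GENESIS_HASH
    for record in ledger:
        expected = _record_hash(record["content_sha256"], record["prev_hash"])
        if record["prev_hash"] != prev_hash or record["record_hash"] != expected:
            return False
        prev_hash = record["record_hash"]
    return True


def is_registered(ledger: list, content: bytes) -> bool:
    """Check whether this exact content was registered in the ledger."""
    digest = hashlib.sha256(content).hexdigest()
    return any(record["content_sha256"] == digest for record in ledger)


ledger = []
append_record(ledger, b"original press video bytes")
append_record(ledger, b"original interview audio bytes")
```

With this ledger, `is_registered(ledger, b"original press video bytes")` is true, while a manipulated copy of the file produces a different fingerprint and is not found. Note that this only proves a file matches something previously registered; it does not by itself prove the registered content was genuine.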

To comprehensively protect organizations, combining technology with proactive defense strategies is essential:

- Multi-Layer Authentication: Enhance security by integrating biometrics, user behavior analysis, and strong passwords.

Tip: Use secure password generators to avoid risks from weak password habits.

- Staff Training: Build a culture of deepfake awareness by equipping employees with knowledge to recognize manipulated content in images, videos, and audio.

- Standardized Verification Processes: For critical financial or internal communications, implement rigorous verification protocols beyond relying on sight and sound.

- Digital Signatures & Watermarking: Apply watermarks and digital signatures to original content to verify authenticity and prevent tampering.
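As a rough illustration of the signing idea in the last item, the sketch below attaches an authentication tag to a piece of content and checks it later. It uses HMAC from the Python standard library, which is a shared-secret scheme and only a stand-in here: true digital signatures use a private/public key pair so that anyone can verify without being able to sign. The function names and the example key are hypothetical.

```python
import hashlib
import hmac


def sign_content(content: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the content bytes."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def is_authentic(content: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare in constant time."""
    return hmac.compare_digest(sign_content(content, key), tag)


# Example key for illustration only; a real key must be random and kept secret.
publisher_key = b"example-secret-key-not-for-production"

original = b"CEO statement video, original bytes"
tag = sign_content(original, publisher_key)

tampered = b"CEO statement video, manipulated bytes"
```

Here `is_authentic(original, tag, publisher_key)` returns `True`, while the same check on `tampered` returns `False`, because any change to the bytes changes the tag. This verifies integrity of a specific file; it does not detect a deepfake that was never signed in the first place, which is why it is paired with the other measures in the list.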

As technology advances faster than human perception, understanding the nature and risks of deepfake is no longer optional but a necessity.

VR360 hopes this overview of deepfake technology and its risks helps you understand the potential dangers and equips you to proactively detect and respond to manipulated content.


CONTACT VR360 TO COLLABORATE

VR360: INNOVATE TO BE DIFFERENT

- Facebook: https://www.facebook.com/vr360vnvirtualtour/

- Hotline: 0935 690 369

- Email: infor@vr360.com.vn

- Address:

- 123 Phạm Huy Thông, Sơn Trà, Đà Nẵng

- Citilight Building, 45 Võ Thị Sáu, Đakao, District 1, Ho Chi Minh City

- 3B Đặng Thái Thân, Phan Chu Trinh, Hoàn Kiếm, Hà Nội

Table of content

Articles on the same topic

Những khó khăn trong chuyển đổi số cấp xã phường

05/02/2026

05/02/2026 Một số giải pháp trong công tác chuyển đổi số cấp xã, phường

26/01/2026

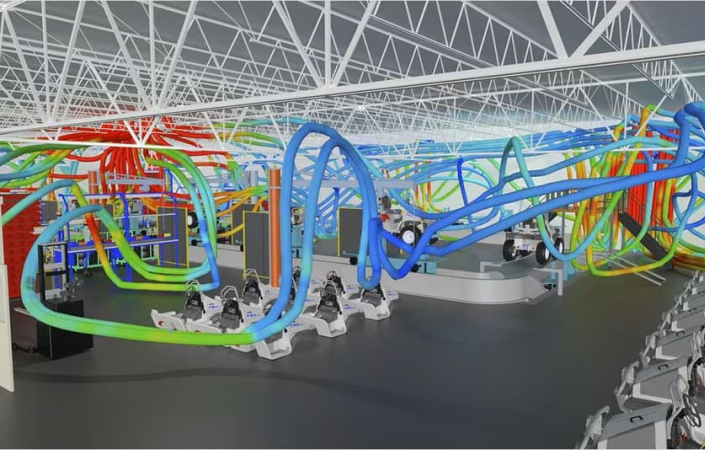

26/01/2026 Virtual Twin: Vai trò then chốt trong đổi mới thiết kế, chế tạo

23/01/2026

23/01/2026 So sánh Local AI và AI on Cloud: Giải pháp nào tốt nhất cho doanh nghiệp?

15/01/2026

15/01/2026 GRPO là gì? Thuật toán huấn luyện đằng sau DeepSeek

13/01/2026

13/01/2026 Chuyển đổi số cấp xã, phường: Nền tảng của chính quyền số

24/12/2025

24/12/2025 Local AI là gì? Ứng dụng và lợi ích khi triển khai

23/12/2025

23/12/2025 Sự khác biệt giữa AI Agent và AI Chatbot: Không chỉ nằm ở tên gọi

10/12/2025

10/12/2025 Thực tế ảo năm 2026: Dự báo xu hướng và cơ hội cho doanh nghiệp

09/12/2025

09/12/2025 Giải mã các loại thực tế ảo

21/11/2025

21/11/2025